AI is changing UX, augmenting but not replacing designers

I’ve been working with AI for a while. Sometimes it gives me really good stuff, and other times I want to throw my laptop in the trash. Either way, here’s the short answer: it’s good for research studies (good, not great, not excellent), nice for wireframing, and great for drawing some kinds of analytics conclusions. Unfortunately, it can also push teams toward look-alike interfaces and shallow decisions if you don’t use your own judgment to question its outputs.

I use AI daily to draft personas, convert paper sketches into low-fi wireframes, get quick preliminary insights from Google Analytics exports, and even run some basic heuristic analysis. But no matter what, I always end up customizing and changing everything.

Our goal as UX designers HAS NOT changed: empathy, creativity, and ethical judgment are still part of our job, and they are what make us human.

For me it’s simple: treat AI as a tool, be the orchestrator, and keep users at the center. My question for you is:

Can AI actually understand complex human behavior and emotions?

What “AI in UX” really means in 2025 (and what it doesn’t)

I think AI touches UX at three different points:

- Generative design support: writing, UI suggestions, wireframe starters, content variations, microcopy drafts.

- Decision support: summarizing qualitative research, clustering feedback, finding anomalies in event data, and early predictive signals.

- Experience delivery: personalization, recommendations, smart assistance, accessibility boosters (e.g., alt-text drafts, captions).

In short, AI deliverables are mainly drafts, and as I write this article, AI still hasn’t been able to replace product thinking. I’ve tried “design from scratch” features, and they are useful for kicking things off but not for finishing. AI models tend to remix what already exists, so if you are looking for innovation, you’ll have to channel your inner Stu Pickles, Tommy’s inventor dad in “Rugrats” (notice my 90s reference :D).

How I explain it to stakeholders: AI shrinks the time from question to a credible draft. It doesn’t shrink the time needed for validation, ethical review, or crafting a design that earns trust.

Where AI helps most today: how AI has been affecting UX design in practice

User research at scale

I paste interview notes or survey comments into ChatGPT so it can cluster themes and surface contradictions for me. It’s fast at “first-pass sense-making,” especially when I ask it to list evidence for and against a hypothesis. Buuuut, I still have to run a human pass to check for bias and missing voices. Even so, I save hours of tedious sorting.

Low-fi to wireframe

This is my favorite: I sketch on paper, take a photo with my phone, and ask Gemini to produce a basic wireframe that respects my hierarchy. I’ve done this with general LLMs and with Figma’s AI features. Figma is a little more complex, since you have to give it a good prompt for it to work. Both give me a bare-bones layout that I then refine, and the benefit of Figma is that I can edit the result directly. The key is to start from your own structure, not a model’s generic template.

If you ask me how this will evolve in the near future, I think we are moving toward mid-fidelity wireframing: AI tools will be able to create more accurate wireframes from our prompts.

Fast user test – AI feedback

For usability sessions, AI helps me condense all my notes into findings sorted by task, quotes tagged by severity, and recommendations. But because AI can be biased, I always ask for a counterexample so I don’t get a one-sided story.

I treat all of this as a fast draft, not my final report; it helps me deal with the blank page.

Time-saving AI analysis

I export Google Analytics (or product event data) to Sheets/CSV and drop it into an AI assistant to generate hypotheses: “Which paths correlate with abandonment?” “What changed week over week after a release?” In a matter of minutes I can surface “hidden patterns,” which I then verify with proper segmentation and sanity checks.
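
To sanity-check a “what changed week over week” answer yourself, a few lines of Python are enough. This is a minimal sketch that assumes a hypothetical CSV export with `date`, `event_name`, and `count` columns (real GA exports are shaped differently, so rename the columns to match your file). It sums one event per ISO week and compares the two most recent weeks in the data.

```python
import csv
import io
from collections import defaultdict
from datetime import date
from typing import Dict, Optional, Tuple

# Hypothetical export shape: one row per day and event. Rename columns to match your file.
SAMPLE = """date,event_name,count
2025-03-03,purchase,40
2025-03-04,purchase,60
2025-03-10,purchase,30
2025-03-11,purchase,45
2025-03-10,page_view,900
"""

Week = Tuple[int, int]  # (ISO year, ISO week number)


def weekly_totals(csv_text: str, event: str) -> Dict[Week, int]:
    """Sum the daily counts of a single event per ISO week."""
    totals: Dict[Week, int] = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["event_name"] != event:
            continue
        iso = date.fromisoformat(row["date"]).isocalendar()
        totals[(iso[0], iso[1])] += int(row["count"])
    return dict(totals)


def week_over_week(totals: Dict[Week, int]) -> Optional[float]:
    """Percent change from the second-latest week to the latest week in the data."""
    weeks = sorted(totals)
    if len(weeks) < 2 or totals[weeks[-2]] == 0:
        return None  # not enough data, or nothing to compare against
    previous, latest = totals[weeks[-2]], totals[weeks[-1]]
    return (latest - previous) / previous * 100


if __name__ == "__main__":
    purchases = weekly_totals(SAMPLE, "purchase")
    print(purchases)                  # {(2025, 10): 100, (2025, 11): 75}
    print(week_over_week(purchases))  # -25.0
```

If the AI reports a big change after a release and a check like this shows something different, look for tracking or segmentation issues before writing a ticket.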

Accessibility boosters

AI is also nice for drafting alt text, summarizing long content, and proposing reading-level adjustments. Even so, I still do manual checks, and where possible I recommend usability testing with assistive-tech users, because they are the end users.

Workflow examples you can copy (personas, paper-to-wireframe, GA→Sheets→Insights)

Persona draft prompts: how AI has been affecting UX design for research

AI is usually good for drafting personas, and it can speed up your workflow, but you have to customize the drafts with real data if you don’t want to end up with the same personas as Walmart.

Prompt pattern:

“Create 3 provisional personas for (whatever you need) for this specific audience: (briefly describe your audience). Use only the following documents as the source of truth (upload research docs, transcripts, or online links). For each persona, draft: goals, pain points, context of use, success metrics, and accessibility considerations. Flag any assumptions you made. Also, please add 5 interview questions I should ask to validate.”

I always end up rewriting names, challenging stereotypes, and adding real quotes. After all, the benefit here is more time to focus on other things. Remember: the responsibility is always yours.

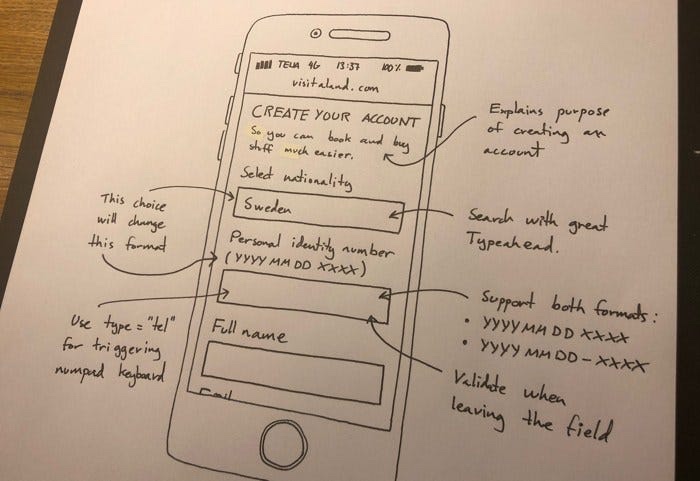

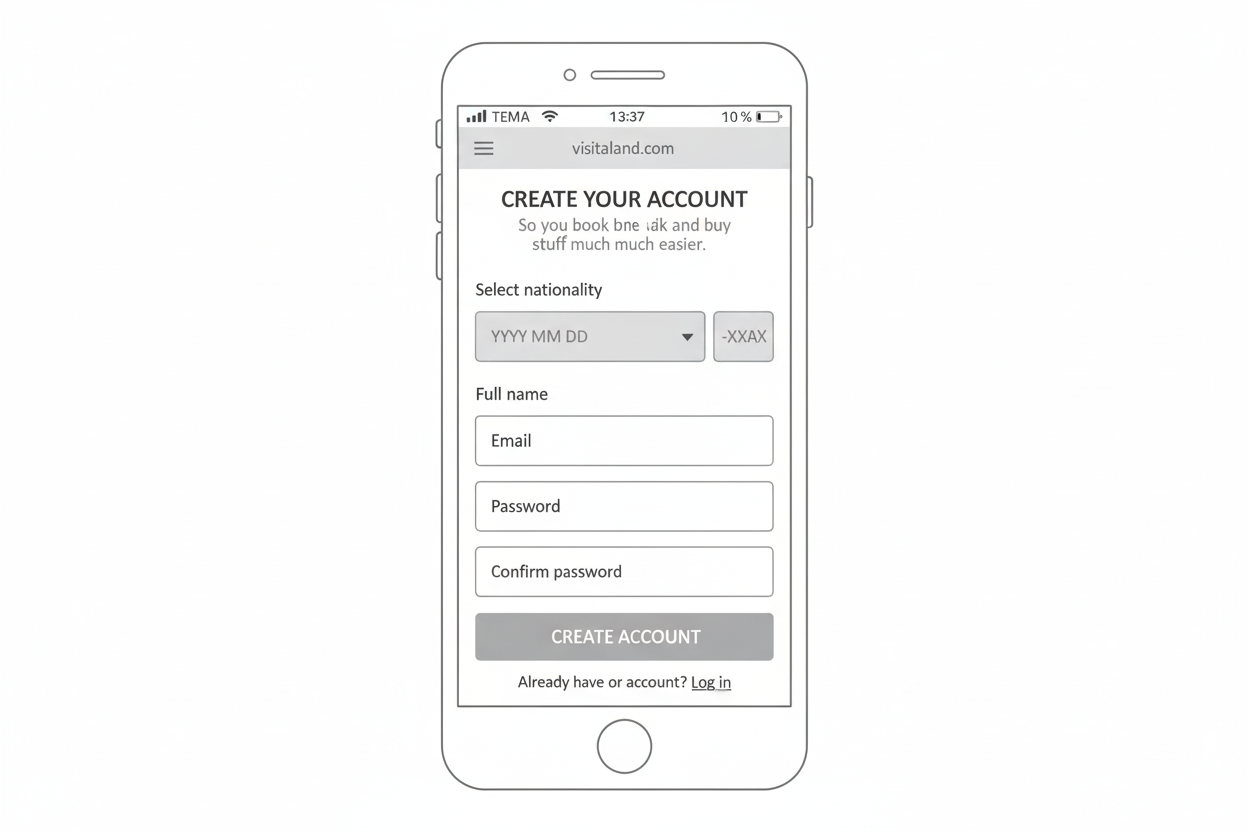

Paper sketch → AI wireframe → human iteration

This is especially useful if you need to generate ideas faster, e.g., in an online Crazy 8s session with your team.

- Paper sketch with labeled sections and priority.

- Google Gemini prompt (faster with Nano Banana): “Convert this sketch to a low-fi wireframe.”

- Upload the results to FigJam and start voting.


GA exports → quick wins

- Export key events and funnel data to Sheets.

- ChatGPT or Kimi: “Find segments with abnormal drop-offs in the last 30 days; propose 3 hypotheses each; list needed follow-up analyses.”

- Validate with filters, compare against control periods, and check for tracking glitches.

- Turn only the validated insights into backlog items.

Be careful: because of models’ context-window limits, AI tools don’t always do a good job at this task, so keep an eye on every insight they provide.
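
Because the AI’s answer can be incomplete, it helps to be able to re-run the “compare against control periods” check yourself. Here is a minimal sketch, assuming you already have user counts per funnel step for the current period and for a control period. The step names, counts, and the 10-point threshold are all hypothetical. Run it once per segment (device, channel, and so on). It also flags any step with more users than the step before it, which in a closed funnel usually points to a tracking glitch rather than a real insight.

```python
from typing import Dict, List, Tuple

# Hypothetical funnel steps and counts; replace with your own export.
FUNNEL = ["view_item", "add_to_cart", "begin_checkout", "purchase"]
CONTROL = {"view_item": 1000, "add_to_cart": 300, "begin_checkout": 150, "purchase": 100}
CURRENT = {"view_item": 1000, "add_to_cart": 300, "begin_checkout": 90, "purchase": 95}


def drop_offs(counts: Dict[str, int], steps: List[str]) -> Dict[str, float]:
    """Share of users lost between each step and the next (0.0 to 1.0)."""
    rates: Dict[str, float] = {}
    for prev, nxt in zip(steps, steps[1:]):
        if counts[prev] > 0:
            rates[f"{prev}->{nxt}"] = 1 - counts[nxt] / counts[prev]
    return rates


def flag_abnormal(current: Dict[str, int], control: Dict[str, int],
                  steps: List[str], threshold: float = 0.10) -> List[Tuple[str, str]]:
    """Flag transitions whose drop-off grew by more than `threshold` vs. the control period."""
    now, base = drop_offs(current, steps), drop_offs(control, steps)
    flags: List[Tuple[str, str]] = []
    for transition, rate in now.items():
        if rate < 0:
            # More users at a later step than an earlier one: check instrumentation first.
            flags.append((transition, "negative drop-off, check tracking"))
        elif transition in base and rate - base[transition] > threshold:
            delta = (rate - base[transition]) * 100
            flags.append((transition, f"+{delta:.1f} pts vs. control"))
    return flags


if __name__ == "__main__":
    for transition, reason in flag_abnormal(CURRENT, CONTROL, FUNNEL):
        print(transition, "|", reason)
```

With the sample numbers, it flags the add-to-cart → checkout step (drop-off up 20 points) and marks checkout → purchase as a likely tracking problem. Only the first one is a candidate insight worth validating further.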

Risks to watch: hallucinations, bias, and the great UI look-alike problem

I’ve witnessed AI reinforce stereotypes and hallucinate from time to time. It’s our responsibility as designers not to accept model defaults and to personalize our own experience with the model, because homogenization is our antagonist and the biggest risk to UX teams. Interfaces that all look and feel the same? No thanks…

Anti-homogenization checklist

- Before you even start prompting, plan your own narrative (jobs to be done, constraints, brand voice).

- Research and pick the best model for the task you want to accomplish.

- Force model “creativity”: ask for 3–5 distinct patterns and choose intentionally.

- Ban generic copy: replace placeholder microcopy with real voice and domain terms.

- Run a periodic bias audit: sample outputs for stereotypes, exclusionary language, and skewed defaults.
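
A periodic bias audit starts with a repeatable sample. The sketch below draws a seeded random sample of saved AI outputs and flags phrases from a watchlist your team maintains. The watchlist entries here are placeholders, not a vetted list, and the function names are hypothetical. Treat it as a crude first filter that tells you where to look: it can’t detect skewed defaults or subtle stereotypes, which still need a human read.

```python
import random
import re
from typing import Dict, List

# Placeholder watchlist: your team should own and extend this; entries are examples only.
WATCHLIST: Dict[str, List[str]] = {
    "gendered default": ["chairman", "manpower"],
    "ableist phrasing": ["lame"],
    "age stereotype": ["tech-illiterate"],
}


def sample_outputs(outputs: List[str], k: int, seed: int = 0) -> List[str]:
    """Draw a reproducible random sample so the same audit can be re-run later."""
    rng = random.Random(seed)
    return rng.sample(outputs, min(k, len(outputs)))


def audit(texts: List[str]) -> List[Dict[str, str]]:
    """Return one finding per watchlist phrase found (whole-word, case-insensitive)."""
    findings: List[Dict[str, str]] = []
    for text in texts:
        for category, phrases in WATCHLIST.items():
            for phrase in phrases:
                if re.search(rf"\b{re.escape(phrase)}\b", text, re.IGNORECASE):
                    findings.append({"category": category, "phrase": phrase, "text": text})
    return findings


if __name__ == "__main__":
    outputs = [
        "Persona: Maria, 68, is tech-illiterate and avoids apps.",
        "Persona: Sam manages a small team and shops on mobile.",
    ]
    for finding in audit(sample_outputs(outputs, k=10)):
        print(finding["category"], "->", finding["phrase"])
```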

If my designs begin to “blend in” and AI starts affecting the experience, I stop, take a break, and revisit my research artifacts, because as designers we can get overwhelmed and start seeing good stuff where there isn’t any.

The UX Orchestrator: skills to build (AI literacy, prompts, data sense, ethics)

I know this may be hard to believe, but our role is shifting from pure maker to orchestrator. AI will become incredibly good at technical work, but it will always need us to guide it toward more integrated outputs and to act as the intermediary between business and users (as we do today). In other words, we are adding a new actor: the “AI” designer. To deal with all of this, I’m actively cultivating the following skills:

- AI literacy: what the tools can and can’t do, and when not to use them.

- Prompt craft: I’m learning to build good prompt structures and constraints and to refine outputs, turning vague requests into valuable results.

- Data interpretation: reading data with judgment and a bit of logic, spotting what might be skewing it, and knowing when a pattern is just noise rather than a real connection.

- Ethics & safety: learning to reduce bias helps you spot even the subtlest biases in AI outputs. We also need to understand how using AI directly affects results and users, and how to reduce the risks and errors that affect the experience.

- Facilitation: learning to guide cross-functional teams so they can evaluate AI outputs critically, challenge assumptions, and turn feedback into concrete design decisions. How? Through UX workshops, team meetings, and daily check-ins.

- Systems thinking: seeing the product as a whole, setting boundaries so AI-powered features integrate coherently and share a unified voice instead of becoming scattered functions, and keeping the experience consistent across all user journeys.

Finally, in practice, I “talk” to models like junior collaborators: set context, assign roles, ask for alternatives, demand sources, and always validate.

Tooling snapshot: Figma AI, ChatGPT/Gemini, Uizard, Hotjar/Qualtrics AI (when to use what)

Here’s a snapshot of the AI tools I use, and why:

| Use case | Good starting tool(s) | Why it helps | What I still do manually |
|---|---|---|---|
| Persona first drafts | ChatGPT/Gemini | Fast structure from messy notes | Remove stereotypes, add quotes, validate with users |
| Paper→low-fi wireframe | Figma AI / Uizard / LLM + plugin | Converts hierarchy to layout quickly | Fix information priority, adjust flows, content strategy |
| Microcopy variations | LLMs + style guide | Generates voice-consistent options | Final tone, legal/compliance, localization |
| Research synthesis | LLMs with transcripts | Clusters themes, finds contradictions | Re-read critical quotes, check sampling |
| Analytics triage | GA → Sheets → LLM | Surfaces anomalies, suggests hypotheses | Verify segmentation, instrument events properly |
| Accessibility boosts | LLMs for alt text, summaries | Drafts faster | Manual testing with assistive-tech users |

I treat all of the above as assistive, never authoritative.

Measuring Impact (and Why It Matters)

This subject is important because AI integrated in UX isn’t just about speed—it’s about proving real value. That’s why measurement matters: it keeps AI from being just a shiny toy.

So… my suggestion: measure your AI use.

What to track

- Efficiency: How much time you save on repetitive tasks (summaries, first wireframes, copy drafts). Always compare before/after.

- Effectiveness: Does AI actually help users succeed? Check usability tests: task completion, error rates, time on task.

- Experience: Look at user sentiment (CSAT/NPS) where AI-driven changes go live. Are people happier, more confident, or more frustrated?

Why This Framing Adds Value

If AI saves hours but doesn’t move success rates, that’s operational efficiency, not user value. Both are worth reporting, but don’t confuse one for the other.

This is what really matters to stakeholders: are we making the team faster, the product better, or both?

A Simple Hypothesis Framework

- Hypothesis: “(AI tool or integration) for (activity) will reduce (time) by X% without hurting (task success).”

- Evidence: Baseline vs. after-AI numbers, with sample size and caveats.

- Decision: Scale up if it works, tweak if mixed, or drop if it fails.

This way, AI measurement becomes part of your product strategy, even if you hadn’t considered it before.
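
Here’s one way to turn the hypothesis framework into a repeatable decision rule. It’s a minimal sketch with hypothetical thresholds (a 20% time-reduction target, a 2-point tolerance on task success, at least 10 observed tasks per condition) that you should agree on with your team. It compares simple averages and is not a statistical significance test.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Tuple


@dataclass
class Measurement:
    task_minutes: List[float]  # time spent per observed task
    successes: int             # tasks completed successfully
    attempts: int              # tasks attempted

    @property
    def success_rate(self) -> float:
        return self.successes / self.attempts if self.attempts else 0.0


def decide(baseline: Measurement, after: Measurement,
           target_reduction: float = 0.20,
           success_tolerance: float = 0.02,
           min_samples: int = 10) -> Tuple[str, float]:
    """Return (decision, time reduction) for 'AI reduces time by X% without hurting success'."""
    if min(len(baseline.task_minutes), len(after.task_minutes)) < min_samples:
        return "collect more data", 0.0
    reduction = 1 - mean(after.task_minutes) / mean(baseline.task_minutes)
    time_ok = reduction >= target_reduction
    success_ok = after.success_rate >= baseline.success_rate - success_tolerance
    if time_ok and success_ok:
        return "scale up", reduction
    if time_ok or success_ok:
        return "tweak", reduction  # mixed evidence
    return "drop", reduction


if __name__ == "__main__":
    before = Measurement(task_minutes=[30.0] * 10, successes=9, attempts=10)
    with_ai = Measurement(task_minutes=[21.0] * 10, successes=9, attempts=10)
    decision, reduction = decide(before, with_ai)
    print(decision, f"{reduction:.0%}")  # scale up 30%
```

Report the reduction together with the sample size, as the framework suggests, so stakeholders can see how much evidence sits behind each decision.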

Implementation Playbook: how to actually make it work

Prompts you can steal (and adapt)

- Research synthesis → “Group these interview notes into 4–6 themes. Show me quotes for each theme, and also what doesn’t fit or is still unknown.”

- Wireframe from sketch → “Turn this sketch into a mobile-first wireframe. Keep sections A, B, C in priority. Make buttons at least 44px. Export as components I can move in Figma.”

- Microcopy → “Give me 5 button label options in our brand voice. Avoid jargon. Explain why each works and check accessibility contrast.”

- Analytics triage → “From this CSV, flag the top 3 drop-off points this week. Suggest 3 possible reasons for each and the simplest test to confirm.”

Think of these as starter packs—you copy, tweak, and iterate. They save you from blank-page syndrome, not from thinking.
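
Two of the constraints in these prompts, minimum button size and contrast, are easy to verify yourself instead of trusting the model’s word. This sketch uses the WCAG 2.x relative-luminance and contrast-ratio formulas (4.5:1 for normal text, 3:1 for large text) plus the 44px floor from the wireframe prompt. It assumes 6-digit hex colors and sizes in CSS pixels, and `check_button` is a hypothetical helper, not part of any tool mentioned here.

```python
from typing import List


def _linear(channel_8bit: int) -> float:
    """Linearize one 8-bit sRGB channel (WCAG 2.x relative luminance definition)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def luminance(hex_color: str) -> float:
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)


def contrast_ratio(fg: str, bg: str) -> float:
    lighter, darker = sorted((luminance(fg), luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def check_button(fg: str, bg: str, width_px: float, height_px: float,
                 large_text: bool = False, min_size_px: float = 44) -> List[str]:
    """Return a list of problems; an empty list means both checks passed."""
    problems: List[str] = []
    needed = 3.0 if large_text else 4.5
    ratio = contrast_ratio(fg, bg)
    if ratio < needed:
        problems.append(f"contrast {ratio:.2f}:1 is below {needed}:1")
    if min(width_px, height_px) < min_size_px:
        problems.append(f"target {width_px}x{height_px}px is smaller than {min_size_px}px")
    return problems


if __name__ == "__main__":
    print(check_button("#777777", "#FFFFFF", 48, 48))  # gray on white narrowly fails 4.5:1
    print(check_button("#000000", "#FFFFFF", 40, 48))  # passes contrast, too narrow
```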

Safeguards (a.k.a. don’t skip this part)

- Always ask AI for its assumptions or sources.

- Check personas and copy for hidden bias.

- Have a rule of “AI off” for sensitive stuff (like consent language or legal text).

Human-in-the-loop (non-negotiable)

- A designer reviews every AI draft.

- PM or legal looks at anything sensitive.

- Researchers validate claims with real users whenever possible.

Because at the end of the day, AI can speed you up, but it can’t own the responsibility. You do.

The road ahead: keeping empathy, creativity, and judgment at the center

I’ve heard the “AI will replace designers” line for years. I don’t buy it. The best work I admire, across both product and brand, still comes from human imagination guided by real empathy. AI can shorten the path to a competent draft, but it can’t care, it can’t weigh trade-offs with moral judgment, and it doesn’t own the outcomes with users. That’s ultimately how AI has been affecting UX design: it elevates the mundane, but the magic still belongs to us humans.

Use AI to save time and automate the repetitive bits, prepare to orchestrate, and double down on the human skills that actually differentiate our craft.

FAQs

Will AI replace UX designers?

Nope. It handles repetitive tasks but can’t replicate human empathy, creativity, or ethical judgment.

How do I keep designs from looking the same when I use AI?

Start with your user research, ask for varied options, and customize with your brand’s voice. Test against human designs.

Can I generate a full interface from scratch?

It can give you a starting point, but great designs need human refinement and user testing.

How can I use AI with Google Analytics or Sheets?

Export data to a spreadsheet, ask AI to spot trends or drop-offs, then verify with proper checks before acting.

What new skills should I build?

Focus on AI literacy, prompt crafting, data analysis, ethics, and team facilitation, alongside core UX skills.
