I’ll never forget the day I convinced my team to skip user research because “we already know what users want.” Spoiler alert: we didn’t. We spent three months building a feature that literally nobody used, and I had to sit through the most uncomfortable stakeholder meeting of my career. That expensive mistake taught me something invaluable – choosing the right research method at the right time isn’t just nice to have, it’s the difference between building something people love and building something that collects dust.

Here’s the thing though: UX research in 2025 looks nothing like it did even two years ago. Yes, the fundamentals haven’t changed – we still need to understand users, validate ideas, and iterate based on real feedback. But AI has changed UX work in ways that make research faster, deeper, and honestly, way more accessible to teams without massive budgets.

This cheat sheet is what I wish I had when I was starting out: a no-nonsense guide to which research method to use when, plus AI prompts that’ll help you analyze results without spending weeks drowning in data. Whether you’re a solo designer at a startup or part of a UI/UX design agency, this is your practical roadmap.

TL;DR: There are 20+ core UX research methods organized across four key phases (Discover, Explore, Test, Monitor). Use qualitative methods when you need to understand why users behave a certain way, quantitative when you need to measure what they’re doing at scale, and always combine both for the complete picture. AI can now speed up analysis by 60-70%, but never skip the human interpretation step.

When to Use Which User Experience Research Methods

Let me tell you about the time I ran a usability test on a prototype that nobody wanted. The test went great – users could complete tasks, the interface was intuitive, everything checked out. But we still bombed at launch because we skipped the discovery research that would’ve told us we were solving the wrong problem entirely.

The phase you’re in determines your method. Period. Not your budget, not your timeline (though those matter), but where you are in the product lifecycle. Here’s how I think about it after screwing this up enough times to finally get it right:

The Four Research Phases and When to Deploy Them

Discover Phase

This is your “do we even need this?” stage. During this phase, you’re validating assumptions, understanding the problem space, and figuring out if there’s actually a problem worth solving. Skip this phase and you’re building on quicksand. I use discovery methods when:

- Starting any new project or feature

- Stakeholders are making big assumptions about users

- Something’s broken but nobody knows why

- You’re entering a new market or user segment

Explore Phase

Now you’re in “how might we solve this?” territory. At this point, you’ve validated the problem, and you’re figuring out the right solution approach. This is where creativity meets constraints. I lean on exploration methods when:

- Multiple solution paths exist and we need to pick one

- Designing something novel without clear patterns

- Iterating on early concepts and wireframes

- Building information architecture from scratch

Test Phase

The “does this actually work?” checkpoint. By now, you have designs, maybe even working prototypes, and you need to validate that they solve the problem without creating new ones. Testing happens when:

- You have something concrete to put in front of users

- Development is about to start (or has started)

- You need evidence for stakeholders to proceed

- Accessibility compliance needs verification

Monitor Phase

Your “how’s it performing in the wild?” continuous improvement cycle. At this stage, the product is live, and you’re tracking real behavior to find optimization opportunities. Monitoring kicks in when:

- The feature/product is in production

- You need baseline metrics for future improvements

- Users are reporting issues you need to prioritize

- You’re preparing data for your next iteration

| Research Phase | Primary Goal | When to Use | Key Methods | Typical Timeline |
|---|---|---|---|---|
| Discover | Validate the problem worth solving | Before any design work | User interviews, field studies, stakeholder interviews, analytics review | 2-4 weeks |
| Explore | Generate and evaluate solution approaches | After problem validation, before high-fidelity design | Card sorting, paper prototypes, journey mapping, competitive analysis | 3-6 weeks |
| Test | Validate designs work as intended | When you have testable designs or prototypes | Usability testing, accessibility audits, surveys, A/B tests | 1-3 weeks per cycle |
| Monitor | Measure real-world performance and identify improvements | Post-launch, continuously | Analytics, heatmaps, support ticket analysis, user feedback | Ongoing |

Here’s what trips people up: they think these phases happen sequentially, like steps on a ladder. In reality, they’re more like a spiral. You discover something during testing that sends you back to explore. You monitor something in production that triggers new discovery research. The best teams I’ve worked with treat this as a continuous cycle, not a waterfall.

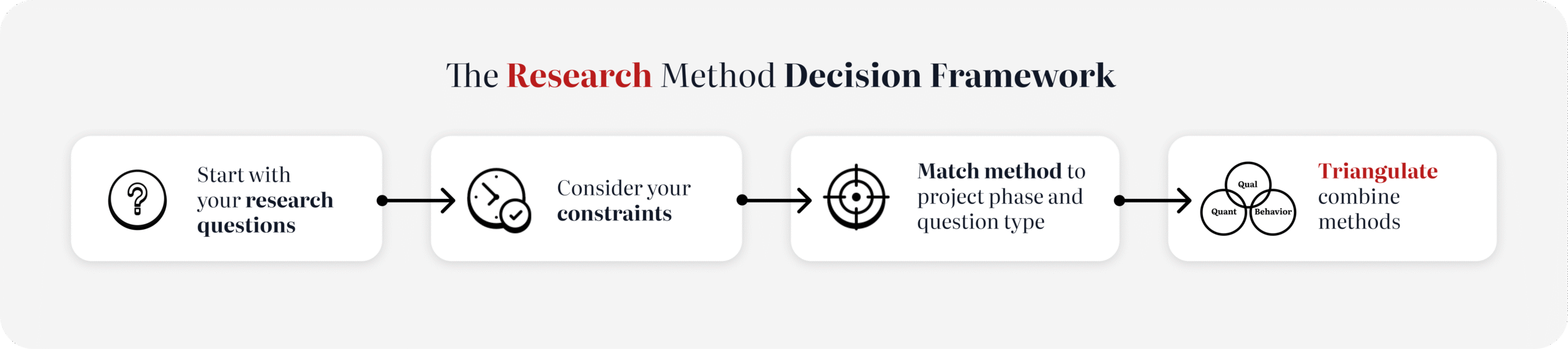

Quick Decision Framework: Method Matcher

When I’m stuck deciding which method to use, I ask myself three questions:

- What do I need to learn? (Behavior, attitudes, or both?)

- How much do I already know? (Complete unknown vs. validating assumptions)

- What resources do I have? (Time, participants, budget)

The answers usually point me in the right direction. Need to understand motivations? Qualitative. Need to measure impact? Quantitative. Need both? (The answer is usually both.) Mix them strategically.

AI Prompt for Method Selection:

I'm working on [describe project/feature]. We're at the [discover/explore/test/monitor] phase. Our primary goal is to [specific goal]. We have [timeframe] and [budget constraints]. Based on this context, recommend:

1. The 3 most appropriate research methods

2. Why each method fits our constraints

3. What we'll learn from each

4. Estimated time and participant requirements

5. Potential risks of skipping any method

This prompt has saved me hours of second-guessing. The AI suggestions aren’t gospel, but they force you to think through your constraints systematically.

User Research Methods and Best Practices

Okay, let’s get into the actual methods. I’m not going to bore you with academic definitions – instead, I’m giving you the real-world version I use with my teams, complete with the mistakes I’ve made so you don’t have to.

Discovery Methods: Finding the Real Problem

User Interviews

The OG research method, and still one of the best when done right. Unfortunately, I’ve seen people completely botch interviews by asking leading questions or turning them into sales pitches. Here’s what actually works:

When to use: When you need deep insights into motivations, pain points, and context. Perfect for understanding the “why” behind behaviors.

Interview Best Practices From the Trenches

Following these guidelines will significantly improve your interview quality:

- Schedule 60 minutes but plan for 45 (people always go over or need bathroom breaks)

- Never ask “would you use this?” – people lie, even when they don’t mean to

- Follow the “5 whys” technique but know when to stop (after about 3 whys usually)

- Record everything with permission – your memory will fail you

- Interview at least 5 people per user segment (I aim for 7-8 to be safe)

My biggest mistake: I once interviewed only “ideal users” and missed an entire segment who were struggling. So always include edge cases and struggling users – that’s where the gold is.

AI Prompt for Interview Analysis:

I conducted [number] user interviews about [topic]. Here are the key quotes and observations: [paste interview notes]. Please:

1. Identify 5-7 major themes across all interviews

2. Highlight contradictions or tensions in what users said

3. Surface assumptions users made that might not be true

4. Suggest 3 follow-up questions I should ask in next round

5. Flag any bias in my question framing based on the notes

This prompt helps me spot patterns I might miss when I’m too close to the data. Just remember: AI finds patterns, but you interpret meaning.

Field Studies (Contextual Inquiry)

Going to where users actually work/live/struggle is uncomfortable, time-consuming, and absolutely worth it. I’ve watched users MacGyver solutions in ways we never imagined in our clean office environment.

When to use: When context matters enormously, when users can’t articulate their workflow, when you suspect workarounds exist.

Best practices:

- Be a fly on the wall first, interviewer second

- Note the environment as much as the actions (lighting, noise, interruptions)

- Take photos of workspace organization (with permission)

- Ask users to narrate what they’re doing, not why (the why comes later)

- Plan for 2-3 hours minimum per session

Reality check: Field studies are expensive and logistically annoying. But I’ve never regretted doing one. The insights always justify the effort.

Stakeholder Interviews

These aren’t user research, but they’re research nonetheless. Stakeholders have business constraints, political concerns, and assumptions that will sink your project if ignored.

When to use: At the very start, before you talk to users (so you know what questions to ask).

What to extract:

- Success metrics they actually care about (not the ones they say in public)

- Budget and timeline constraints (real ones, not aspirational)

- Political landmines and sacred cows

- Existing data or research they’re basing decisions on

- What “done” looks like to them specifically

Pro tip: Ask stakeholders to predict what users will say. Then show them when the research contradicts their predictions. Data changes minds; opinions don’t.

Exploration Methods: Designing Solutions

Card Sorting

I love card sorting for one reason: it shows you how users actually think about your content, not how you think they think about it. Those are usually very different things.

When to use: Building or redesigning navigation, organizing content, creating taxonomies.

Understanding Card Sort Types

Two flavors:

- Open card sort: Users create their own categories (use this when you have no idea how to organize things)

- Closed card sort: Users fit cards into your predefined categories (use this to validate your structure)

Running Effective Card Sorts

Best practices:

- 30-50 cards is the sweet spot (more and people get fatigued)

- Run with at least 15 participants for reliable patterns

- Include cards users might search for but you don’t have (reveals gaps)

- Run it remotely with tools like Optimal Workshop or UserZoom

- Follow up with interviews to understand the “why” behind their groupings
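If your tool doesn’t hand you a similarity matrix, you can build a rough one yourself. Here’s a minimal sketch, assuming each participant’s open sort is stored as a mapping from their own category names to the cards they put there. The card names, the sample data, and the `co_occurrence` helper are all made up for illustration.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(sorts):
    """Return {(card_a, card_b): share of participants who grouped the pair together}."""
    pair_counts = Counter()
    for groups in sorts:                # one participant's sort
        for cards in groups.values():   # one category that participant created
            for a, b in combinations(sorted(cards), 2):
                pair_counts[(a, b)] += 1
    n = len(sorts)
    return {pair: count / n for pair, count in pair_counts.items()}


# Hypothetical results from three participants (a real study needs far more).
sorts = [
    {"Money": ["Invoices", "Payments"], "Me": ["Profile"]},
    {"Billing": ["Invoices", "Payments", "Profile"]},
    {"Docs": ["Invoices"], "Account": ["Payments", "Profile"]},
]

similarity = co_occurrence(sorts)
for pair, share in sorted(similarity.items(), key=lambda kv: -kv[1]):
    print(pair, round(share, 2))
```

Pairs with a high share are strong candidates for living in the same category; pairs that hover around the middle are the ones worth asking about in follow-up interviews.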

My mistake: I once ran a card sort with internal jargon on the cards. As a result, users had no idea what half of them meant. Use their language, not yours.

AI Prompt for Card Sort Analysis:

I ran a card sort with [number] participants organizing [number] cards. Here's the data: [paste results/similarity matrix]. Please:

1. Identify the 3-5 strongest category patterns

2. Highlight cards that participants struggled to categorize

3. Suggest 2-3 alternative organizational structures based on the data

4. Point out any categories that were too broad or too narrow

5. Recommend follow-up questions to resolve ambiguous groupings

Journey Mapping

Journey maps are powerful when they’re research-based and useless when they’re not. Unfortunately, I’ve seen too many “journey maps” that are just stakeholder fanfiction about what they hope users do.

When to use: Understanding end-to-end experiences, identifying pain points across touchpoints, building empathy with stakeholders.

What Makes a Good Journey Map

- Based on actual research, not assumptions (this is non-negotiable)

- Includes emotions, not just actions

- Shows both current state (“as-is”) and future state (“to-be”)

- Identifies moments of delight and moments of pain

- Links to specific improvements you can make

Journey Mapping Best Practices

Best practices:

- Start with user goals, not your product features

- Include all touchpoints, even ones you don’t control

- Add quotes from real users (builds credibility)

- Make it visual but not so pretty that nobody dares update it

- Workshop it with stakeholders – the discussion is as valuable as the artifact

AI Prompt for Journey Map Synthesis:

I have interview data from [number] users about their experience with [process/product]. Key findings: [paste themes]. Create a journey map framework with:

1. 5-7 key stages from user perspective

2. Main actions, thoughts, and emotions at each stage

3. Pain points and opportunities for improvement

4. Which stages need most urgent attention and why

5. Questions we still need to answer about the journey

Prototyping (Paper to High-Fidelity)

Prototypes aren’t just deliverables – they’re research tools. The fidelity you choose sends a message about what feedback you want.

Paper prototypes: Use when ideas are rough, you want divergent feedback, and you need to move fast. Users feel comfortable criticizing stick figures.

Low-fidelity digital: Use when testing flows and structure. Tools like Balsamiq or even Figma with gray boxes work great.

High-fidelity interactive: Use when testing visual design, microinteractions, or when you need stakeholder buy-in. Warning: people will comment on colors and ignore broken flows.

The prototype paradox: The better it looks, the less honest feedback you get. I learned this after spending a week making a pixel-perfect prototype only to have users say “it’s great!” when it clearly wasn’t. They didn’t want to hurt my feelings.

Testing Methods: Validation Time

Qualitative Usability Testing

This is my desert island research method. If I could only do one thing for the rest of my career, this would be it. Watching users struggle with your interface is humbling, uncomfortable, and absolutely essential.

When to use: When you have something interactive to test (prototype or live product) and you need to find usability issues.

Moderated vs. Unmoderated Testing

The unmoderated vs. moderated debate:

- Moderated: You’re there (in person or remote), can ask follow-ups, can probe deeper. Best for complex tasks or when you need qualitative depth.

- Unmoderated: Users test alone, usually faster and cheaper. Best for simple tasks or when you need scale.

Generally, I do 3-5 moderated sessions first to find the big issues, then validate fixes with unmoderated testing at scale.
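Why do so few sessions surface the big issues? A common back-of-the-envelope model says the share of problems seen at least once after n sessions is 1 - (1 - p)^n, where p is the chance that any one participant hits a given problem. The sketch below assumes p = 0.31, an often-quoted average from the usability literature. Your product’s real value may be quite different, so treat the numbers as directional only.

```python
def share_of_problems_found(n_participants, p_detect=0.31):
    """Expected share of problems observed at least once after n sessions.

    p_detect is an assumption: the chance a single participant runs into
    a given problem. Lower it for subtle or rare issues.
    """
    return 1 - (1 - p_detect) ** n_participants


for n in (1, 3, 5, 8, 15):
    print(n, f"{share_of_problems_found(n):.0%}")
```

The curve flattens quickly, which is why a handful of moderated sessions finds the obvious problems, and why rarer issues need the larger unmoderated samples.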

My Testing Protocol

My testing protocol (refined over 100+ sessions):

- Warm up (5 min): Build rapport, explain thinking aloud, emphasize there are no wrong answers

- Context questions (5 min): Understand their background without spoiling tasks

- Tasks (30-35 min): 3-5 realistic scenarios, not “click the button” instructions

- Debrief (10 min): What did they think overall, what would they change, what confused them

- Thank and compensate: Respect their time with appropriate incentive

Critical Testing Mistakes to Avoid

- Leading them when they struggle (bite your tongue)

- Explaining features (users won’t have you there in real life)

- Testing too many things (focus on 3-5 critical flows)

- Only testing with people who love your product (get critics too)

AI Prompt for Usability Test Analysis:

I conducted [number] usability tests where participants attempted [list tasks]. Here are my session notes and observations: [paste notes]. Please:

1. Categorize issues by severity (critical/high/medium/low)

2. Identify patterns across sessions (what failed repeatedly?)

3. Prioritize top 5 issues to fix first based on frequency and impact

4. Suggest potential root causes for the most critical issues

5. Draft 3 hypothesis-driven recommendations for improvements
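If you’d rather do the “frequency and impact” ranking yourself before handing notes to an AI, here’s a minimal sketch. The severity weights and the frequency-times-weight score are my own assumptions, not a standard, and the example issues are invented.

```python
from dataclasses import dataclass

# Assumed weights: tune these to match how your team defines severity.
SEVERITY_WEIGHT = {"critical": 4, "high": 3, "medium": 2, "low": 1}


@dataclass
class Issue:
    name: str
    severity: str            # one of the SEVERITY_WEIGHT keys
    sessions_affected: int   # number of sessions where the issue appeared


def prioritize(issues, total_sessions, top_n=5):
    """Rank issues by (share of sessions affected) x (severity weight)."""
    def score(issue):
        frequency = issue.sessions_affected / total_sessions
        return frequency * SEVERITY_WEIGHT[issue.severity]

    return sorted(issues, key=score, reverse=True)[:top_n]


# Hypothetical findings from five sessions.
issues = [
    Issue("Coupon field hides the pay button", "critical", 4),
    Issue("Unclear shipping label", "medium", 5),
    Issue("Typo on confirmation page", "low", 2),
]

for issue in prioritize(issues, total_sessions=5):
    print(issue.name)
```

A score like this is a conversation starter, not a verdict. A critical issue seen once can still deserve to jump the queue.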

A/B Testing

A/B testing gets misused more than any other method. It’s not about “which blue is better” – it’s about testing a clear hypothesis and checking whether the result is statistically significant.

When to use: When you have traffic, when you’ve already done qualitative research, when you’re optimizing not exploring.

What Makes a Good A/B Test

- Clear hypothesis (“Changing X will improve Y because Z”)

- One variable at a time (usually – multivariate needs huge traffic)

- Predetermined sample size and confidence level

- Sufficient runtime (1-2 full weeks minimum for most products)

- Success metrics defined upfront
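To make “predetermined sample size” concrete, here’s a rough sketch using the standard normal-approximation formula for comparing two conversion rates. The baseline and expected rates in the example are invented, and the defaults (5% significance, 80% power, two-sided test) are common conventions rather than anything specific to your product.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate users needed in EACH variant for a two-sided test of two proportions."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # critical value for the significance level
    z_beta = NormalDist().inv_cdf(power)            # critical value for the desired power
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical: 10% baseline conversion, hoping to detect a lift to 12%.
print(sample_size_per_variant(0.10, 0.12))
```

Run the numbers before the test starts. If the answer is more traffic than you get in a couple of months, that’s your cue to pick a different method.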

When NOT to A/B Test

- Low traffic situations (you’ll never reach significance)

- Fundamental UX problems (fix the broken experience first)

- When you’re just curious (have a hypothesis or don’t bother)

Reality check: Most A/B tests show no significant difference. That’s fine. That’s data. Don’t keep testing until you get the answer you want.
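For a quick sanity check on whether a difference is real, here’s a minimal two-proportion z-test sketch. It assumes independent users in each variant and samples large enough for the normal approximation; the conversion counts are made up. Most testing tools do this (or something more sophisticated) for you, so treat it as a way to understand the result, not replace the tool.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)                  # rate if there were no difference
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))    # standard error under that assumption
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical: variant B converts 215 of 2000 users vs. 200 of 2000 for A.
z, p = two_proportion_z_test(conv_a=200, n_a=2000, conv_b=215, n_b=2000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

In this invented example the p-value lands well above 0.05, which is exactly the “no significant difference” outcome described above.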

Monitoring Methods: Post-Launch Reality

Analytics Review

Analytics tell you what happened, but not why. That’s the trap – people look at a drop-off and make assumptions instead of following up with qualitative research.

Key Metrics to Track

What to track:

- Task completion rates (not page views)

- Time to complete key workflows (not time on site)

- Error rates and form abandonment (where people give up)

- Feature adoption and engagement (what actually gets used)

- Funnel conversion and drop-off points (where you’re losing people)
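Funnel drop-off is easy to compute from raw step counts if your analytics tool doesn’t surface it. A minimal sketch, assuming you can export how many users reached each step; the step names and numbers below are invented.

```python
def funnel_report(steps):
    """steps: ordered list of (step_name, users_reaching_step).

    Returns (step_name, conversion_from_previous_step, drop_off) for every step after the first.
    """
    report = []
    for (name, users), (_, prev_users) in zip(steps[1:], steps[:-1]):
        step_conversion = users / prev_users
        report.append((name, step_conversion, 1 - step_conversion))
    return report


# Hypothetical checkout funnel.
steps = [("Cart", 1000), ("Shipping", 640), ("Payment", 480), ("Confirmation", 432)]

for name, conversion, drop_off in funnel_report(steps):
    print(f"{name}: {conversion:.0%} continued, {drop_off:.0%} dropped off")
```

The step with the biggest drop-off tells you where to look, not what’s wrong. That part still needs session recordings or interviews.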

My Analytics Analysis Ritual

My analysis ritual:

- Set up a baseline before any changes

- Define what “success” looks like numerically

- Track weekly, act on monthly trends (not daily noise)

- Segment by user type (power users behave differently)

- When something weird happens, investigate immediately

AI Prompt for Analytics Interpretation:

Here's my analytics data for [product/feature] over [timeframe]: [paste key metrics with trends]. Context: [any recent changes or events]. Please:

1. Identify the 3 most significant trends or anomalies

2. Generate hypotheses for what might be causing these patterns

3. Suggest which metrics to correlate to test hypotheses

4. Recommend qualitative research to understand the "why"

5. Flag any potential data quality or tracking issues

Support Ticket Analysis

Your support team knows what’s broken better than anyone. I learned this after ignoring support feedback for months, then discovering the #1 ticket was about a feature we thought was working perfectly.

Best practices:

- Tag tickets by feature/area (make this easy for support to do)

- Review top 10 issues monthly (not just “urgent” tickets)

- Join support for a day quarterly (humbling and educational)

- Track how design changes impact ticket volume

- Build feedback loops from support to product

What Are Quantitative UX Research Methods

Here’s where people get confused: quantitative doesn’t just mean “analytics.” It means any method that produces numerical data you can measure and, with a large enough sample, test for statistical significance. Think numbers, not narratives.

I used to think quantitative research was just for data scientists, not designers. Then I ran a usability test with 50 people (instead of my usual 5) and discovered patterns that completely contradicted what I learned from the small sample. That was my wake-up call about the power of scale.

The Quantitative Toolkit

Surveys – Ask structured questions, get measurable answers. Best when you need to measure attitudes or gather feedback at scale. Typically, I use them after qualitative research to validate if what I heard from 10 users applies to 1,000.

Analytics – Track actual behavior in your product. Shows you what users do, not what they say they do. The numbers don’t lie, but they don’t tell the whole story either.

A/B Testing – Compare two versions statistically. Perfect for optimization, terrible for exploration. Run it when you have traffic and a clear hypothesis.

Clickstream Analysis – Follow the path users take through your product. Reveals navigation patterns and common workflows you didn’t anticipate.

Heatmaps – Visual representation of where users click, scroll, and spend time. Great for understanding attention and interaction patterns on specific pages.

Benchmark Testing – Measure task success, time, and errors numerically. Compare before and after redesigns to quantify improvement.

Tree Testing – Quantitative method for testing information architecture. Shows you mathematically how findable your content is.

When Quantitative Methods Shine

Use quantitative research when you need to:

- Validate findings from qualitative research at scale

- Make data-driven decisions that require statistical proof

- Measure the impact of design changes objectively

- Track trends over time with consistent metrics

- Prioritize issues based on frequency and impact

- Prove ROI to stakeholders (they love numbers)

The trap: Quantitative data feels authoritative, so people trust it even when it’s wrong. Always validate your tracking implementation, check for sampling bias, and don’t make causal claims from correlational data.

| Method | Sample Size | Time Investment | When to Use | Primary Output |
|---|---|---|---|---|
| Surveys | 100+ for reliability | 1-2 weeks | Validate attitudes/preferences at scale | Structured data, percentages, ratings |
| Analytics | Depends on traffic | Ongoing | Track actual behavior continuously | Metrics, funnels, trends |
| A/B Testing | Calculated per test | 1-2+ weeks | Optimize specific elements with traffic | Statistical significance, winner |
| Heatmaps | 100+ sessions | Continuous | Understand interaction patterns on pages | Visual attention maps |
| Benchmark Testing | 20+ per condition | 1-2 weeks | Measure task performance numerically | Success rates, time, errors |
| Tree Testing | 50+ participants | 1 week | Validate information architecture findability | Success metrics, paths taken |

AI Prompt for Quantitative Analysis:

I have quantitative data from [method] with [sample size] participants/sessions. Data: [paste numbers/metrics]. Please:

1. Calculate statistical significance if applicable (p-value, confidence intervals)

2. Identify outliers or anomalies that warrant investigation

3. Suggest appropriate visualizations for stakeholder presentation

4. Recommend follow-up tests to validate or expand findings

5. Point out any methodological concerns or limitations
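Confidence intervals are worth checking yourself, because small benchmark samples produce wide ones. Here’s a sketch of the Wilson score interval for a task success rate, a reasonable choice when samples are small. The 16-out-of-20 example is invented.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(successes, n, confidence=0.95):
    """Wilson score interval for a proportion, e.g. a task success rate."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    margin = z * sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - margin, center + margin


# Hypothetical benchmark: 16 of 20 participants completed the task.
low, high = wilson_interval(successes=16, n=20)
print(f"Observed 80%, plausible range {low:.0%} to {high:.0%}")
```

An “80% success rate” from 20 people is compatible with a much less flattering true rate, which is a useful thing to know before you put the number on a slide.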

Quantitative vs. Qualitative: The Forever Debate

Look, this debate is exhausting. You need both. Here’s how I think about it after years of fighting this battle:

Understanding Qualitative Research

Qualitative answers “why” and “how”:

- Gives you rich context and user stories

- Reveals motivations and mental models

- Uncovers unexpected insights

- Works with small samples (5-8 users per segment)

- Generates hypotheses to test quantitatively

Understanding Quantitative Research

Quantitative answers “what” and “how many”:

- Provides statistical proof and scale validation

- Measures impact and trends over time

- Prioritizes issues by frequency

- Requires larger samples for reliability

- Tests hypotheses from qualitative research

The Magic of Combining Both

The magic happens when you combine them. I do qualitative research to understand the problem deeply, then quantitative to validate that the problem (and solution) applies broadly. Repeat this cycle and you’ll build products people actually love.

How to Choose UX Research Methods

This is the question I get asked most by junior designers: “Which method should I use?” And my answer always frustrates them: “It depends.” However, here’s the framework that makes “it depends” actually useful.

The Research Method Decision Framework

I’ve made every mistake possible in method selection – running surveys when I needed interviews, doing usability tests when I needed field studies, wasting weeks on research that answered the wrong question. After enough expensive failures, I developed a framework that actually works.

Start with Your Research Questions

Before you pick a method, get crystal clear on what you need to learn. Not what would be “nice to know” – what do you actually need to make your next decision?

Bad research question: “What do users think about our product?”

Good research question: “What prevents users from completing onboarding in their first session?”

The specific question points you to the right method. The vague question leads to vague research that helps nobody.

Consider Your Constraints (The Honest Version)

Every blog post pretends you have unlimited time and budget. You don’t. I don’t. Nobody does. So let’s be real about constraints:

Time:

- 1 week or less: Analytics review, guerrilla testing, surveys, unmoderated tests

- 2-4 weeks: User interviews, moderated usability tests, card sorting, diary studies

- 4+ weeks: Field studies, longitudinal studies, comprehensive competitive analysis

Budget:

- Shoestring ($0-$500): Analytics, guerrilla testing, remote unmoderated, surveys with your own users

- Modest ($500-$5k): Recruiting incentives for 5-10 interviews or tests, basic tools

- Healthy ($5k+): Professional recruiting, lab facilities, multiple methods combined

Access to users:

- Easy access: Current users, internal stakeholders, guerrilla testing

- Moderate access: Recruiting via social media, user groups, customer lists

- Difficult access: B2B, enterprise, highly specialized users (pay more, wait longer)
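If you want to turn the time buckets above into a quick shortlist, here’s a minimal sketch. The method names are copied from the time list; the numeric cut-offs in weeks are my reading of those buckets, and `feasible_methods` is a hypothetical helper. Budget and user access would need the same treatment.

```python
# Minimum weeks needed -> methods, taken from the time list above.
# The cut-offs (0, 2, 4) are an interpretation of "1 week or less", "2-4 weeks", "4+ weeks".
METHODS_BY_MIN_WEEKS = {
    0: ["Analytics review", "Guerrilla testing", "Surveys", "Unmoderated tests"],
    2: ["User interviews", "Moderated usability tests", "Card sorting", "Diary studies"],
    4: ["Field studies", "Longitudinal studies", "Comprehensive competitive analysis"],
}


def feasible_methods(weeks_available):
    """Return every method whose minimum timeline fits in the weeks you have."""
    methods = []
    for min_weeks, names in sorted(METHODS_BY_MIN_WEEKS.items()):
        if weeks_available >= min_weeks:
            methods.extend(names)
    return methods


print(feasible_methods(3))
```

A shortlist like this only tells you what’s possible. Which of the feasible methods you pick still depends on your research question.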

Match Method to Project Phase and Question Type

Here’s my mental model for this, built from real projects:

| Your Question | Project Phase | Best Method | Why This Works | Sample Size |
|---|---|---|---|---|
| “What problem should we solve?” | Discover | User interviews, field studies | Deep context, uncovers unknowns | 5-8 per segment |
| “How do users currently solve this?” | Discover | Contextual inquiry, diary studies | Real behavior in real context | 5-10 users |
| “Who are we designing for?” | Discover | Interviews + surveys | Depth + scale validation | 8+ interviews, 100+ surveys |
| “How should we organize content?” | Explore | Card sorting, tree testing | Reveals mental models | 15+ participants |
| “Will users understand this concept?” | Explore | Prototype testing, concept validation | Quick directional feedback | 5-8 users |
| “Does this design work?” | Test | Usability testing (moderated) | Observes actual struggles | 5-8 per major variant |
| “Which version performs better?” | Test | A/B testing, benchmark testing | Statistical validation | Calculated per test |
| “Is it accessible?” | Test | Accessibility audit + testing | Compliance + real user feedback | 3-5 assistive tech users |
| “What’s breaking in production?” | Monitor | Analytics, heatmaps, support tickets | Real usage patterns | All users |
| “Are users satisfied?” | Monitor | Surveys, NPS, satisfaction ratings | Sentiment at scale | 100+ responses |

The “When in Doubt” Rules

After years of this, I’ve developed some shortcuts for when I’m genuinely stuck:

If you’re starting a new project: Do user interviews first. Always. They’re versatile, relatively quick, and prevent you from building the wrong thing.

If you have something to test: Do usability testing. Watch people use it. This never wastes your time.

If stakeholders need convincing: Get quantitative data. Numbers change minds when stories don’t.

If you can only do one thing: For existing products, do usability testing. For new products, do user interviews. These have the highest ROI.

If you’re completely lost: Start with analytics and stakeholder interviews to understand what’s already known, then decide what gaps exist.

Real-World Method Selection Examples

Let me show you how this works in practice with projects I’ve actually done:

Example 1: E-commerce Checkout Redesign

- Question: “Why are 45% of users abandoning cart?”

- Phase: Monitor (problem identified) → Discover (understanding why)

- Methods chosen: Analytics review (identified drop-off points) → Session recordings (saw what users did) → User interviews (understood motivations) → Usability testing (validated new checkout flow)

- Why this sequence: Started with data to find the problem, used qualitative to understand it, tested the solution

- Time: 6 weeks total

- Outcome: Reduced abandonment by 18%

Example 2: New AI Feature Concept

- Question: “Will users understand and trust this AI recommendation engine?”

- Phase: Explore (validating concept) → Test (refining design)

- Methods chosen: Concept testing with low-fi prototypes → User interviews about trust and AI expectations → High-fidelity prototype testing

- Why this sequence: Validated concept before investing in design, understood trust factors, tested final experience

- Time: 8 weeks

- Outcome: Pivoted concept based on trust concerns, launched successfully

Example 3: B2B Dashboard Information Architecture

- Question: “How should we organize 50+ features so users can find what they need?”

- Phase: Explore (defining structure)

- Methods chosen: Card sorting (open, then closed) → Tree testing (validating structure) → First-click testing (validating navigation)

- Why this sequence: Let users create categories, validated our interpretation, tested the final design

- Time: 4 weeks

- Outcome: Improved findability by 40% vs. old design

AI Prompt for Method Selection:

Help me choose research methods for this project:

- Project: [brief description]

- Research question: [what you need to learn]

- Phase: [discover/explore/test/monitor]

- Constraints: [timeline], [budget], [access to users]

- Stakeholder needs: [what they need to see/hear]

Please recommend:

1. Primary method with justification

2. Secondary method to complement/validate

3. Minimum viable approach if constrained

4. How to sequence these methods

5. Expected insights from each method

6. Estimated timeline and participant needs

The Method Combination Strategy

Here’s something that took me years to figure out: the best research uses multiple methods to triangulate. One method gives you a perspective; two methods give you depth; three methods give you confidence.

Recommended Method Combinations

My go-to combinations:

For Discovery:

- Interviews (depth) + Surveys (scale) + Analytics (behavior)

- Stakeholder interviews (constraints) + User interviews (needs) + Competitive analysis (landscape)

For Exploration:

- Card sorting (structure) + Prototype testing (validation) + Journey mapping (context)

- Design review (expert evaluation) + Paper prototypes (quick iteration) + Concept testing (direction)

For Testing:

- Usability testing (qualitative) + Analytics (quantitative) + Surveys (sentiment)

- Benchmark testing (before/after) + Accessibility audit (compliance) + User testing (real experience)

For Monitoring:

- Analytics (what) + Support tickets (pain) + Surveys (why)

- Heatmaps (behavior) + Session recordings (context) + NPS (satisfaction)

The Triangulation Principle

The key is using methods that answer different aspects of the same question. In other words, don’t use five methods that all tell you the same thing.

How Many UX Research Methods Are There

Honest answer? It depends on how you count. Are personas a method or a deliverable? Is guerrilla testing different enough from usability testing to count separately? Different frameworks organize this differently.

But here’s what actually matters: there are enough methods that you’ll never master them all, and few enough that you can build a solid toolkit in a few years. I’ve been doing this for over a decade, and I probably use 10-12 methods regularly, another 10 occasionally, and the rest I’ve tried once or filed under “interesting but impractical.”

The Core UX Research Methods Landscape

Let me organize this the way I think about it – by the type of insight each method produces, not by arbitrary categories:

Methods for Understanding Current Behavior (8 methods):

- User interviews

- Field studies / Contextual inquiry

- Diary studies

- Ethnographic research

- Shadowing

- Analytics review

- Session recordings / Heatmaps

- Support ticket analysis

Methods for Understanding Mental Models (6 methods):

- Card sorting

- Tree testing

- Interviews focused on workflow

- Mental model diagrams

- First-click testing

- Task analysis

Methods for Evaluating Designs (7 methods):

- Usability testing (moderated)

- Usability testing (unmoderated)

- Accessibility audits

- Heuristic evaluation

- Cognitive walkthrough

- Expert review

- Benchmark testing

Methods for Comparing Options (5 methods):

- A/B testing

- Preference testing

- Competitive analysis

- Multivariate testing

- Concept testing

Methods for Measuring Attitudes & Satisfaction (6 methods):

- Surveys

- NPS (Net Promoter Score)

- CSAT (Customer Satisfaction)

- SUS (System Usability Scale)

- Sentiment analysis

- Review analysis

Methods for Generating Ideas (5 methods):

- Brainstorming with users

- Co-design workshops

- Focus groups

- Design sprints with user input

- Participatory design

Methods for Planning & Prioritization (5 methods):

- Stakeholder interviews

- Requirements gathering

- Journey mapping

- Persona development

- Jobs-to-be-done analysis

That’s 42 methods by my count, but honestly the number doesn’t matter. What matters is building fluency with the 10-12 that handle 90% of situations.

The Methods I Actually Use (The Real Talk Version)

In my last 20 projects, here’s what I actually did:

Used on almost every project (my core 7):

- User interviews

- Analytics review

- Usability testing (moderated)

- Surveys

- Competitive analysis

- Prototype testing

- Stakeholder interviews

Used frequently when needed (my extended toolkit):

8. Card sorting

9. Journey mapping

10. Heuristic evaluation

11. A/B testing

12. Session recordings/heatmaps

13. Accessibility testing

Used occasionally (special situations):

14. Field studies

15. Diary studies

16. Tree testing

17. Benchmark testing

18. First-click testing

Tried but rarely use (either too expensive, too time-consuming, or not enough ROI):

- Ethnographic research (too long)

- Focus groups (groupthink kills honesty)

- Eye tracking (expensive, rarely changes decisions)

- Longitudinal studies (timeline incompatible with most projects)

| Method Category | Methods Included | When I Use Them | Typical Sample | Time Investment |
|---|---|---|---|---|
| Discovery | Interviews, field studies, stakeholder interviews, analytics | Start of project, validating problems | 5-8 per segment | 2-4 weeks |
| Mental Models | Card sorting, tree testing, task analysis | Organizing content/features | 15-30 participants | 1-2 weeks |
| Design Evaluation | Usability testing, accessibility audits, heuristic evaluation | Iterative design cycles | 5-8 per cycle | 1-2 weeks per cycle |
| Comparison | A/B testing, preference testing, competitive analysis | Optimization decisions | Varies widely | 2-4+ weeks |
| Measurement | Surveys, NPS, CSAT, analytics | Continuous monitoring | 100+ for surveys | Ongoing |
| Ideation | Co-design, brainstorming, workshops | Early exploration | 5-10 participants | 1-2 days |

Method Evolution: What’s Changed Since 2020

The fundamentals haven’t changed, but how we execute them has transformed:

Remote everything: What used to require flying to users or bringing them to a lab now happens over Zoom. This democratized research but also made it harder to observe context.

AI-assisted analysis: What used to take me a week of coding interview transcripts now takes a day with AI help. But (and this is crucial) the interpretation still needs human judgment.

Tool sophistication: Unmoderated testing platforms got good enough to trust. Figma made prototype testing accessible. Analytics tools got smarter about tracking user flows.

Accessibility focus: It’s no longer optional. Every project needs accessibility testing, and tools like axe DevTools make it easier.

Speed expectations: Stakeholders want answers faster. Two-week sprints mean we need methods that fit that timeline.

What hasn’t changed: Users still lie in surveys, still struggle with bad interfaces, and still appreciate when someone actually cares about their experience.

What Are Some Examples of Qualitative Research Methods in UI/UX Design

Qualitative research is where the magic happens – it’s where you discover not just what users do, but why they do it, what they’re thinking, what frustrates them, and what delights them. Essentially, numbers tell you “what,” but qualitative methods tell you “why.”

I learned the hard way that you can’t skip qualitative research. Early in my career, I optimized a form based purely on analytics. Completion rates went up 5%, so I declared victory. Then I actually talked to users and discovered I’d made the experience more frustrating – they were just pushing through out of necessity. That’s the difference: quantitative data said “success,” qualitative research said “better, but still painful.”

Deep Dive: Essential Qualitative Methods

User Interviews (The Foundation)

This is where it all starts. A good interview uncovers insights you didn’t know to look for. A bad interview confirms your existing biases and wastes everyone’s time.

What makes it qualitative: You’re capturing rich, contextual data about behaviors, motivations, pain points, and emotions. The insights come from the nuances, not the numbers.

My Interview Approach (Refined Over 200+ Interviews)

Preparation:

- Write a discussion guide, not a script

- Plan 5-7 open-ended questions (you’ll only get through 3-4)

- Prepare follow-up probes for each question

- Test your questions on a colleague first

- Schedule 60 minutes, plan for 45

During the interview:

- Start with easy context questions to build rapport

- Ask about past behavior, not hypothetical futures

- Use silence strategically (let them think)

- Follow interesting tangents (some of my best insights came from “oh, one more thing…” moments)

- Take notes even if recording (forces active listening)

Analysis:

- Transcribe if possible (AI makes this cheap now)

- Code for themes across interviews

- Look for patterns AND contradictions

- Pull compelling quotes for stakeholders

- Synthesize into insights, not just findings

Common Interview Mistakes

Common mistakes I see:

- Asking leading questions (“Don’t you think X would be better?”)

- Interviewing only fans (talk to critics and former users too)

- Taking responses at face value (probe deeper)

- Asking about features instead of problems

- Trying to “solve” during the interview (you’re there to listen)

Questions That Actually Work

Example questions that work:

- “Walk me through the last time you [relevant task]. What were you trying to accomplish?”

- “What’s the most frustrating part of [process]? Tell me about a specific time that happened.”

- “If you could wave a magic wand and change one thing about [product/experience], what would it be and why?”

- “What workarounds or shortcuts have you developed? Show me.”

- “What almost stopped you from [important action]? What made you continue?”

AI Prompt for Interview Guide Creation:

I'm planning user interviews for [product/feature]. My research questions are: [list 2-3 questions]. My target users are [description]. Please create:

1. A discussion guide with 5-7 main questions

2. 2-3 follow-up probes for each question

3. Suggested opening context questions

4. Wrap-up questions to capture anything I missed

5. Questions to avoid (leading, hypothetical, or biased)
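If you reuse this prompt across projects, it can help to fill the bracketed slots programmatically so the wording stays consistent. Here is a minimal sketch; the function name `build_interview_guide_prompt` and the sample values are my own illustration, not part of any tool.

```python
def build_interview_guide_prompt(product, research_questions, target_users):
    """Fill the bracketed slots of the interview-guide prompt above."""
    questions = "; ".join(research_questions)
    requests = [
        "A discussion guide with 5-7 main questions",
        "2-3 follow-up probes for each question",
        "Suggested opening context questions",
        "Wrap-up questions to capture anything I missed",
        "Questions to avoid (leading, hypothetical, or biased)",
    ]
    numbered = "\n".join(f"{i}. {text}" for i, text in enumerate(requests, start=1))
    return (
        f"I'm planning user interviews for {product}. "
        f"My research questions are: {questions}. "
        f"My target users are {target_users}. Please create:\n{numbered}"
    )


# Hypothetical project details, purely for illustration.
prompt = build_interview_guide_prompt(
    product="a returns flow",
    research_questions=["Why do users abandon returns?", "Where do they look for help?"],
    target_users="online shoppers who returned an item in the last 6 months",
)
print(prompt)
```

Paste the printed text into whichever AI assistant you use; the template itself is unchanged from the prompt above.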

AI Prompt for Interview Synthesis:

I interviewed [number] users about [topic]. Here are the raw notes/transcripts: [paste content]. Please:

1. Identify 5-7 major themes with supporting quotes

2. Highlight surprising or contradictory findings

3. Create a priority matrix of pain points by frequency and severity

4. Suggest user archetypes based on patterns

5. List unanswered questions for follow-up research
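Whatever the AI gives you for the priority matrix, it is worth sanity-checking by hand. Below is a rough sketch of one way to do it, assuming you have already coded each observation with a pain point and a severity from 1 (annoying) to 3 (blocking). The scoring rule (share of participants affected times worst severity seen) and all the sample data are my assumptions, not a standard.

```python
# Hypothetical coded observations: (participant, pain point, severity 1-3).
observations = [
    ("p1", "confusing returns policy", 3),
    ("p2", "confusing returns policy", 3),
    ("p3", "generic chatbot answers", 2),
    ("p1", "generic chatbot answers", 2),
    ("p4", "confusing returns policy", 2),
    ("p5", "slow search", 1),
]


def priority_matrix(observations, n_participants):
    """Rank pain points by (share of participants affected) x (worst severity)."""
    affected = {}    # pain point -> set of participants who hit it
    severities = {}  # pain point -> list of severity ratings
    for participant, pain, severity in observations:
        affected.setdefault(pain, set()).add(participant)
        severities.setdefault(pain, []).append(severity)

    rows = []
    for pain, participants in affected.items():
        frequency = len(participants) / n_participants
        worst = max(severities[pain])
        rows.append((pain, frequency, worst, frequency * worst))
    # Highest score first.
    return sorted(rows, key=lambda row: row[3], reverse=True)


matrix = priority_matrix(observations, n_participants=5)
for pain, frequency, worst, score in matrix:
    print(f"{pain}: frequency={frequency:.0%}, severity={worst}, score={score:.1f}")
```

Counting participants rather than mentions keeps one very vocal user from dominating the ranking.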

Usability Testing (Watching Users Struggle)

I put this under qualitative because while you can count task success, the real value is observing where users struggle and understanding why.

What makes it qualitative: You’re watching behavior in context, capturing think-aloud commentary, and understanding the user’s mental model in real-time. The numbers (success rate, time on task) are useful, but the observations are gold.

My Testing Setup

My testing setup:

- 5-8 participants per major user segment

- 3-5 realistic tasks (not “click here” instructions)

- 45-60 minute sessions

- Think-aloud protocol (awkward but essential)

- Record screen and face if possible

- Take extensive notes (AI can help, but don’t rely only on recordings)

What to Look For During Tests

What I’m looking for:

- Where do users pause or hesitate?

- What do they say out loud vs. what they do?

- What do they expect to happen that doesn’t?

- Where do they create workarounds?

- What delights or frustrates them?

- What vocabulary do they use?

Essential Moderator Skills

The moderator skills nobody teaches you:

- Don’t help when they struggle (this is the hardest part)

- Use neutral phrases: “What are you thinking?” not “Does that make sense?”

- Stay quiet during tasks (your silence is data)

- Probe after, not during (“I noticed you hesitated there – what were you thinking?”)

- Watch body language and vocal tone

- End gracefully even if it goes badly

Real Testing Example

Real example from a recent test:

Task: “Find out how to return a product you ordered.”

What happened: 4 out of 5 users clicked the Help icon, got frustrated with the chatbot, then gave up and called customer service.

The quantitative data: 20% task success rate.

The qualitative insight: Users didn’t trust the returns policy in the footer because it was written in legal language. They wanted a simple “Start a return” button on their order history. The chatbot made things worse by giving generic responses.

See the difference? Numbers told me it was broken. Observations told me how to fix it.
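One caution on the number itself: with five participants, a 20% success rate is a very rough estimate. Here is a small sketch that computes the rate and puts a Wilson score interval around it. The Wilson interval is a standard statistical technique I am bringing in for illustration; it was not part of the original test, and the outcome list simply mirrors the 1-in-5 result above.

```python
import math


def task_success_rate(outcomes):
    """Share of participants who completed the task (True = success)."""
    return sum(outcomes) / len(outcomes)


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a proportion (z=1.96 is roughly 95%)."""
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half


# Four participants gave up, one completed the return.
outcomes = [False, False, False, False, True]
rate = task_success_rate(outcomes)
low, high = wilson_interval(sum(outcomes), len(outcomes))
print(f"{rate:.0%} success, plausible range {low:.0%} to {high:.0%}")
```

The range is wide, which is the point: small tests tell you that something is broken and why, not exactly how broken it is across all users.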

Field Studies / Contextual Inquiry (Going to Their World)

This is qualitative research on expert mode. Essentially, you’re going to where users actually work/live/struggle to observe them in their natural environment.

What makes it qualitative: Context is everything. You’re capturing environmental factors, workarounds, interruptions, and cultural factors you’d never discover in a lab.

When Field Studies Are Worth It

When it’s worth the investment:

- Enterprise B2B products (users can’t explain their workflow out of context)

- Healthcare (environment dramatically affects usage)

- Physical products (usage context matters enormously)

- When users have developed complex workarounds

- When you suspect the “happy path” doesn’t exist in reality

My Field Study Approach

My field study approach:

- Observe first, interview second (don’t interrupt natural workflow)

- Take photos of workspace, tools, workarounds (with permission)

- Note everything: lighting, noise, interruptions, physical constraints

- Ask users to narrate what they’re doing in real-time

- Stay 2-3 hours minimum (workflows aren’t always immediate)

- Follow up after to ask about what you observed

Unexpected Field Study Insights

What I learned from field studies that I never would have discovered otherwise:

- Hospital nurses don’t use the “efficient” workflow we designed because they’re interrupted every 3 minutes – they need a system that saves state constantly

- Construction managers use printed screenshots taped to clipboards because tablets die in direct sunlight – our tablet-first design was useless

- Teachers can’t use our collaboration tool during class because school wifi blocks external services – we needed an offline mode

The investment: Field studies are expensive (travel, time, logistics) and exhausting (being “on” for hours observing). However, when you need deep understanding of context, nothing else comes close.

AI Prompt for Field Study Analysis:

I conducted [number] field studies observing [user type] performing [activities]. Key observations: [paste detailed notes]. Please:

1. Identify environmental factors affecting user behavior

2. Surface workarounds users have developed and why

3. Highlight differences between stated workflow and actual behavior

4. Suggest design implications based on observed constraints

5. Recommend follow-up questions to validate interpretations

Diary Studies (Longitudinal Insights)

Getting users to document their experiences over days or weeks reveals patterns you’d never catch in a one-hour interview.

What makes it qualitative: You’re capturing real experiences as they happen, with emotional context and temporal patterns. Users record their thoughts, frustrations, and successes in the moment.

Best for:

- Understanding frequency and patterns of use

- Capturing experiences that happen irregularly

- Studying decision-making processes over time

- Understanding emotional journey and relationship with product

My diary study setup:

- 5-10 participants (more is better but diminishing returns)

- 1-2 weeks duration (sweet spot for most products)

- Daily or event-based prompts (depends on use case)

- Mobile-friendly entry method (text, photo, voice)

- Follow-up interview after to discuss patterns

Prompt examples:

- “Each time you use [product] today, tell us: What were you trying to do? Did it work? How did you feel?”

- “At the end of each day: What task related to [domain] did you struggle with most? Why?”

- “When you almost gave up on [task], take a photo/screenshot and tell us what happened.”

Reality check: Compliance is always an issue. Even with incentives, expect some participants to forget or drop off. Build that into your sample size.
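To build drop-off into the sample size, divide the number of completed diaries you want by the share of participants you expect to finish. A minimal sketch follows; the dropout rates are placeholders you should replace with your own experience, not benchmarks.

```python
import math


def recruits_needed(target_completers, expected_dropout):
    """How many people to recruit so that enough finish the diary study.

    expected_dropout is a fraction between 0 (nobody drops) and just under 1.
    """
    if not 0 <= expected_dropout < 1:
        raise ValueError("expected_dropout must be in [0, 1)")
    # round() guards against floating-point noise before rounding up.
    return math.ceil(round(target_completers / (1 - expected_dropout), 6))


# Hypothetical planning numbers.
print(recruits_needed(8, 0.2))   # want 8 finished diaries, expect 20% drop-off
print(recruits_needed(10, 0.3))  # want 10 finished diaries, expect 30% drop-off
```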

Think-Aloud Protocol (Accessing the Inner Monologue)

This isn’t a separate method – it’s a technique used during usability testing, prototype testing, or cognitive walkthroughs. But it’s so important it deserves its own section.

What it is: Asking users to verbalize their thoughts, reactions, and decision-making as they complete tasks. It’s weird and uncomfortable, but incredibly revealing.

Why it works: Users say things like “I’m looking for something that says ‘help’… I guess ‘support’ is the same thing… wait, or maybe it’s under ‘account’… okay I’m lost.” That stream-of-consciousness reveals their mental model, expectations, and confusion in real-time.

How to coach it:

- Demonstrate with an example (I often have them talk through making toast)

- Remind them there are no wrong answers

- Encourage even “obvious” observations

- When they go quiet: “What are you thinking right now?”

- Don’t respond to their questions – redirect: “What would you try next?”

The awkwardness factor: Most users aren’t natural think-alouders. They’ll do it for 30 seconds, forget, and go silent. Gentle reminders help, but some people just can’t maintain it. That’s okay – their actions still tell you a lot.

When to Choose Qualitative Over Quantitative

Choose qualitative methods when you need to:

- Understand motivations, not just behaviors

- Discover problems you don’t know exist yet

- Get depth over breadth (5 revealing interviews > 500 survey responses)

- Capture emotional and contextual factors

- Generate hypotheses for quantitative validation

- Understand the “why” behind quantitative trends

Qualitative research is best for:

- Early discovery (you don’t know what you don’t know)

- Understanding complex decision-making

- Designing for emotion and trust

- Uncovering workarounds and pain points

- Building empathy with stakeholders

The limitation: You can’t generalize from small samples. What you learn from 8 interviews might not apply to all users. That’s why you follow qualitative research with quantitative validation when it matters.

| Method | Best For | Sample Size | Typical Duration | Key Insights |
|---|---|---|---|---|
| User Interviews | Understanding motivations, pain points, context | 5-8 per segment | 45-60 min each | Why users behave as they do, unmet needs |
| Usability Testing | Identifying interface issues, observing struggles | 5-8 per variant | 45-60 min each | Where designs break, mental models |
| Field Studies | Contextual factors, real-world constraints | 5-10 observations | 2-3 hours each | Environmental impact, workarounds |
| Diary Studies | Patterns over time, emotional journey | 5-10 participants | 1-2 weeks | Frequency, triggers, longitudinal experience |
| Think-Aloud | Real-time thought process, expectations | Same as usability test | Ongoing during task | Mental models, decision-making logic |

Combining Qualitative Methods for Deeper Insight

The strongest research uses multiple qualitative methods together:

Example combo 1: Understanding adoption barriers

- Stakeholder interviews (business perspective)

- User interviews (user perspective)

- Field studies (real-world constraints)

- Result: Triangulated understanding from multiple angles

Example combo 2: Redesigning core workflow

- Usability testing on current product (identify issues)

- User interviews (understand workarounds and goals)

- Usability testing on new prototype (validate improvements)

- Result: Problems identified, solutions validated

The power of triangulation: When three different qualitative methods all point to the same insight, you can be confident it’s real even without large sample sizes.
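A quick way to check triangulation is to tally how many methods support each theme. The sketch below assumes you have already coded findings into short theme labels per method. The labels, the sample data, and the three confidence tiers (which follow the one, two, three methods rule of thumb from earlier) are illustrative assumptions.

```python
# Hypothetical coded themes per method.
findings = {
    "user interviews": {"returns are confusing", "chatbot feels generic"},
    "usability testing": {"returns are confusing", "chatbot feels generic", "search is slow"},
    "field studies": {"returns are confusing"},
}


def confidence_label(n_methods):
    """One method = a perspective, two = depth, three or more = confidence."""
    if n_methods >= 3:
        return "high"
    if n_methods == 2:
        return "medium"
    return "low"


def triangulate(findings):
    """Map each theme to the methods supporting it and a confidence label."""
    support = {}
    for method, themes in findings.items():
        for theme in themes:
            support.setdefault(theme, []).append(method)
    return {
        theme: (sorted(methods), confidence_label(len(methods)))
        for theme, methods in support.items()
    }


result = triangulate(findings)
for theme, (methods, label) in sorted(result.items()):
    print(f"{theme}: {label} ({', '.join(methods)})")
```

Themes that land in the "low" tier are not wrong; they are the ones to carry into follow-up research.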

AI Prompt for Qualitative Research Synthesis:

I used multiple qualitative methods: [list methods and sample sizes]. Here are the key findings from each: [paste organized notes]. Please:

1. Identify themes that appear across multiple methods

2. Highlight contradictions between methods and hypotheses for why

3. Assess confidence level for each finding (multiple sources = higher confidence)

4. Suggest which findings need quantitative validation

5. Create a narrative synthesis connecting all insights

Frequently Asked Questions

How much does UX research cost?

It varies wildly based on method and whether you DIY or hire an agency. For small projects, you can do basic research for under $1k (participant incentives + tools). Mid-size projects with recruiting and analysis run $5k-$20k. Comprehensive research programs for major products can be $50k-$200k+. My advice: start small with the highest-ROI methods (interviews and usability testing) and scale up as you prove value.

Can I do UX research with no budget?

Absolutely. I’ve done some of my best research with zero budget. Guerrilla testing, internal users, friends/family as proxy users, free tools, and analyzing existing data all cost nothing but time. The constraint forces creativity. Just be transparent about limitations and don’t generalize too far from convenience samples.

How many participants do I need for usability testing?

The famous “5 users find 85% of issues” rule is generally true for simple interfaces with a single user type. But for complex products or multiple user segments, you need more. I typically do 5-8 per distinct user segment. Want statistical significance? You need 20+ per condition. The real answer: test with as many as you can afford, but 5 is better than 0.
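The 85% figure comes from a simple model: if each user has a fixed chance of running into any given issue, the share of issues found after n users is 1 - (1 - p)^n. Here is a sketch of that arithmetic. The default p = 0.31 is the value commonly cited alongside the rule and is an assumption here; your product's real detection rate may be quite different.

```python
def share_of_issues_found(n_users, p_detect=0.31):
    """Expected share of issues seen at least once after n_users sessions.

    p_detect is the assumed chance that a single user hits a given issue.
    """
    return 1 - (1 - p_detect) ** n_users


for n in (1, 3, 5, 8):
    print(f"{n} users: {share_of_issues_found(n):.0%} of issues")

# A harder-to-hit class of issues (assumed p = 0.10) needs far more users.
print(f"5 users at p=0.10: {share_of_issues_found(5, p_detect=0.10):.0%}")
```

With p = 0.31, five users land at roughly 84%. Drop p to 0.10, as you might for a complex product where each issue only affects some workflows, and five users find only about 41%, which is why more segments and more complexity call for more participants.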

What’s the difference between UX research and market research?

Market research asks “will people buy this?” and “what’s the market opportunity?” UX research asks “can people use this?” and “does this solve their problem?” Market research focuses on market size, segmentation, and positioning. UX research focuses on usability, user needs, and product experience. You need both, but they answer different questions. Market research happens before you build; UX research happens while you build.

When should I do research vs. just talk to users?

“Talking to users” IS research – informal research, but still research. The difference is structure and rigor. Casual conversations with users are great for continuous discovery and relationship building. Formal research is needed when you’re making big decisions, need to convince stakeholders, or need to understand patterns across many users. Do both. Regular informal contact keeps you close to users; structured research gives you confidence to make big bets.

Can AI replace UX researchers?

No, and it won’t anytime soon. AI can help analyze data, spot patterns, generate hypotheses, and draft interview guides. But it can’t build rapport with users, pick up on subtle emotional cues, make ethical judgment calls, or synthesize insights into strategic design direction. AI is an amazing research assistant, but the researcher role requires empathy, creativity, and strategic thinking that AI doesn’t have. Use AI to speed up the tedious parts so you can focus on the human parts.

How do I convince stakeholders to invest in UX research?

Stop talking about “research” and start talking about “risk reduction.” Frame it as: “We can spend $10k on research now, or risk wasting $200k building something nobody wants.” Show them the cost of their last failed project. Share a competitor who succeeded with research-driven design. Start small – do one quick study, show the insights, demonstrate ROI. Make research visible by sharing insights company-wide. Invite stakeholders to observe sessions (watching users struggle changes minds fast). Most importantly: connect research directly to business metrics they care about.
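If a stakeholder pushes back on the risk framing, the arithmetic is easy to show. This sketch uses the $10k and $200k figures from the example above; the 25% risk reduction is a made-up input for illustration, not a measured result.

```python
def break_even_risk_reduction(research_cost, cost_of_failure):
    """How much research must lower the chance of failure to pay for itself."""
    return research_cost / cost_of_failure


def expected_savings(research_cost, cost_of_failure, risk_reduction):
    """Expected money saved if research cuts failure risk by risk_reduction."""
    return cost_of_failure * risk_reduction - research_cost


break_even = break_even_risk_reduction(10_000, 200_000)
print(f"Research pays off if it cuts failure risk by more than {break_even:.0%}")

# Hypothetical: research lowers the chance of building the wrong thing by 25 points.
print(f"Expected savings: ${expected_savings(10_000, 200_000, 0.25):,.0f}")
```

The break-even point is only 5 percentage points, which is usually an easier claim to defend in a meeting than "research is valuable."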

What’s the most impactful research method?

Usability testing, hands down. Watching someone struggle with your interface is humbling and immediately actionable. It’s also one of the easiest methods to learn and execute.
